Taking into account existing evidence on the accuracy of administrative data on student learning levels in India, Singh and Ahluwalia discuss why a reliable system of student assessment matters: improving the quality of assessment data is a step towards preventing a vicious cycle of mediocrity in the Indian education system. They highlight how independent third-party evaluation and the use of technology and advanced data forensics can prevent the misrepresentation of true learning levels.

‘What gets measured gets done’ is an often-quoted adage. In well-functioning education systems, assessments measure students’ learning levels, help diagnose and correct learning gaps, and help students and systems deliver better learning over time. It is no surprise, then, that student learning outcomes are regularly measured to analyse the progress of education systems around the world, including India’s. But what if the measurement of learning is itself flawed?

At the national level, India reports the learning levels and progress of school-going students through the National Achievement Survey (NAS), a nationally representative examination that indicates how effectively the education system is performing.

NAS findings are used to compute indices such as the School Education Quality Index (SEQI), which ranks states and union territories (UTs) on school education achievement and offers them feedback. The NAS website lists some critical use cases of the survey: “NAS findings would help diagnose learning gaps of students and determine interventions required in education policies, teaching practices, and learning. Through its diagnostic report cards, NAS findings help in capacity building for teachers, and officials involved in the delivery of education. NAS 2021 would be a rich repository of evidence and data points furthering the scope of research and development.”

Obtaining this information reliably is a prerequisite for, and the starting point of, any course correction. However, studies have revealed limitations in the accuracy of NAS.

Existing research on NAS findings

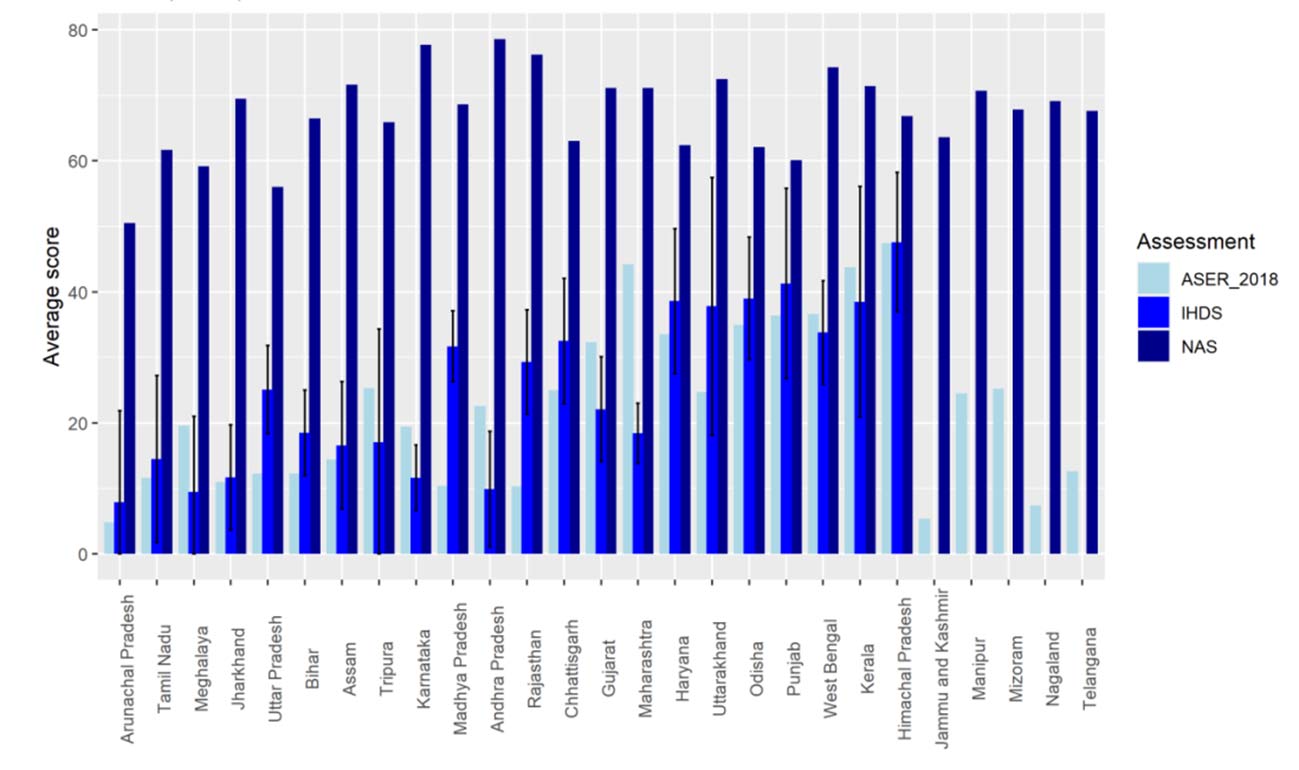

A recent study compares the learning outcomes from three nationally representative surveys: NAS, the Annual Status of Education Report (ASER) and the India Human Development Survey (IHDS) (Johnson and Parrado 2021). To ensure a like-for-like comparison, it focuses on the students and schools (grade 3 students in rural government schools) and the competencies (reading) that are covered by all three surveys. It finds that NAS state averages are significantly higher than both ASER and IHDS state averages, indicating a substantial degree of inflation.

Figure 1. Average scores for the ASER, IHDS and NAS

Source: Johnson and Parrado 2021

Note: The bars on the IHDS show 95% confidence intervals. A 95% confidence interval means that if the survey were repeated over and over with new samples, about 95% of the intervals calculated this way would contain the true population value.
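The “repeat it over and over” reading of a confidence interval can be checked with a small simulation. This is a minimal sketch under assumed conditions: scores are drawn from a normal distribution with a known, hypothetical true mean, and each interval uses the large-sample formula mean ± 1.96 × standard error. The function name `ci_coverage` and all parameter values are illustrative and are not taken from the IHDS or any other survey.

```python
import math
import random


def ci_coverage(true_mean: float, sd: float, n: int, reps: int, seed: int = 0) -> float:
    """Return the share of simulated 95% confidence intervals that contain true_mean.

    Hypothetical setup: each simulated 'survey' draws n scores from a normal
    distribution whose true mean we know, so we can check each interval.
    """
    rng = random.Random(seed)  # fixed seed so the result is reproducible
    z = 1.96  # normal critical value for a two-sided 95% interval
    hits = 0
    for _ in range(reps):
        sample = [rng.gauss(true_mean, sd) for _ in range(n)]
        mean = sum(sample) / n
        # Sample variance with the n - 1 (Bessel) correction.
        var = sum((x - mean) ** 2 for x in sample) / (n - 1)
        se = math.sqrt(var / n)  # standard error of the sample mean
        if mean - z * se <= true_mean <= mean + z * se:
            hits += 1
    return hits / reps


if __name__ == "__main__":
    share = ci_coverage(true_mean=50.0, sd=15.0, n=200, reps=2000)
    print(f"Share of intervals containing the true mean: {share:.3f}")
```

With a few thousand repetitions the printed share should land close to 0.95. Any single interval either contains the true mean or does not, which is why the 95% describes the procedure rather than one particular interval.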

These findings are not surprising. Even a basic process evaluation of NAS 2017 reveals inherent flaws in its administration. Although the examination was administered nationally by the National Council of Educational Research and Training (NCERT) under the aegis of the Ministry of Education (formerly the Ministry of Human Resource Development), state governments were also involved in critical stages of its administration. The test was designed by NCERT, but it was translated at the state level by the State Councils of Educational Research and Training (SCERTs). Furthermore, the test was invigilated in classrooms by Field Invigilators (FIs) who were District Institute of Education and Training (DIET) students appointed by state officials. When states are assessed and ranked on their education performance while also being involved in sensitive processes such as paper translation and test invigilation, it is reasonable to conclude that the fundamental principles of separation of powers and fairness are not being respected. If the same body is rule maker, executor and adjudicator, it has a perverse incentive to project a picture that does not reflect reality.

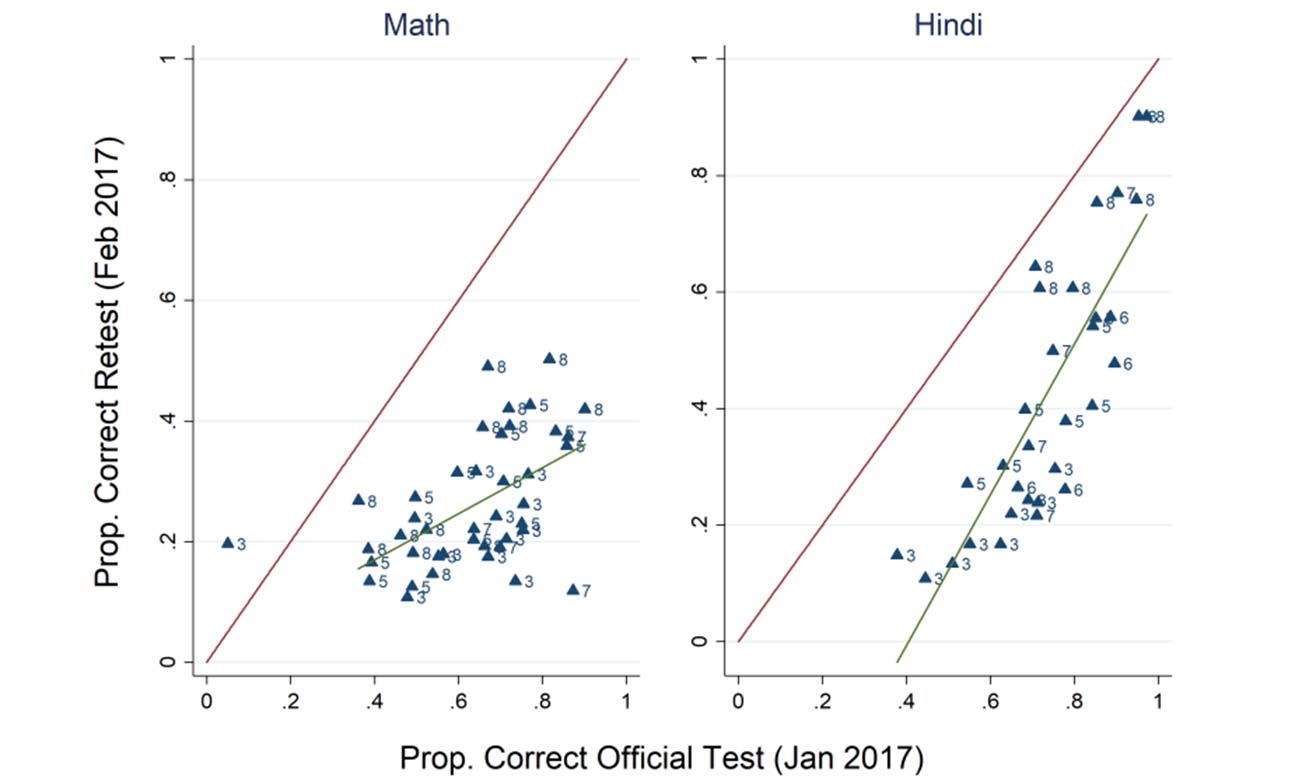

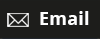

Another study evaluated Pratibha Parv, an annual standardised evaluation of all students in grades 1 to 8 in public schools in Madhya Pradesh (Singh 2020). This examination had been cited as a ‘national best practice’ by NITI Aayog (NITI Aayog 2016). The study found that student achievement as reported by teachers and school personnel in the official test was double the students’ true achievement on the same questions, as measured by independent researchers.

Figure 2. The proportion of correct responses in the official test and the retest

Source: Singh 2020

Note: If the proportions of correct answers in the official and independent tests were similar, all the dots would lie along the red line. Instead, the proportion answering correctly in the official test is much higher in both mathematics and Hindi.
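The comparison in Figure 2 amounts to checking, item by item, whether the official proportion correct sits above the 45-degree (red) line, and by how much. The sketch below assumes per-item proportions from the official test and the independent retest are already available as two aligned lists. The function name `inflation_summary` and the example figures are hypothetical; they are not Singh’s (2020) data.

```python
def inflation_summary(official: list[float], retest: list[float]) -> dict[str, float]:
    """Compare per-item proportions correct in an official test and an independent retest.

    Both lists hold one proportion (0 to 1) per item, in the same item order.
    """
    if not official or len(official) != len(retest):
        raise ValueError("need two non-empty lists of equal length")
    # Items where the official test reports more correct answers than the retest,
    # i.e. points lying above the 45-degree line in a scatter plot.
    above = sum(1 for o, r in zip(official, retest) if o > r)
    mean_official = sum(official) / len(official)
    mean_retest = sum(retest) / len(retest)
    if mean_retest == 0:
        raise ValueError("retest proportions are all zero; ratio is undefined")
    return {
        "items_above_line": above,
        "share_above_line": above / len(official),
        "mean_gap_pp": 100 * (mean_official - mean_retest),  # percentage points
        "ratio_of_means": mean_official / mean_retest,  # 2.0 means official is double
    }


# Hypothetical item-level proportions, for illustration only.
official = [0.80, 0.70, 0.90, 0.60]
retest = [0.40, 0.35, 0.45, 0.30]
print(inflation_summary(official, retest))
```

A ratio of means near 2 would correspond to the ‘double’ pattern described above; with real data, the summary would be computed separately for mathematics and Hindi, as the figure does.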

Why reliable assessment matters

An important premise to establish before digging further into this topic is why unreliable data on student performance matter.

A survey by the Center for Global Development (CGD) of approximately 900 education bureaucrats in low- and lower-middle-income countries found that policymakers tend to have an overly optimistic perception of students’ learning levels. In India, this perception is reinforced by persistent score inflation, which breeds indifference to the scale of the learning crisis. Girindre Beeharry of the Bill and Melinda Gates Foundation (BMGF) lamented that “policymakers are not aware they have a problem to solve” (CGD 2021). Unreliably collected data do not build awareness of the problem. On the contrary, they paint a flattering picture of the government, the education machinery, teachers and other stakeholders, who then have an interest in justifying and preserving it, which makes change very difficult.

Unreliable data therefore both perpetuate and exacerbate the learning crisis. One of the critical responsibilities of the front-line bureaucracy (such as district and block education officers) is to regularly assess classroom teaching methods and student learning levels, and to give teachers feedback on where and how to improve classroom practice. Poor data do not give them a reliable anchor for effective feedback. Moreover, since the system appears on paper to deliver good learning outcomes despite inadequate teaching and learning practices, no stakeholder (students, teachers, bureaucrats or politicians) has much incentive to improve on present practices.

By perpetuating a system with broken feedback loops, and then scaling up ineffective solutions, we effectively set up the state machinery to spend large sums of money without getting better over time, locking it into a vicious cycle of mediocrity.

Changing the culture around assessments to achieve sustainable change

Before designing and scaling specific solutions, a few principles concerning the culture of assessment are critical to consider in order to ensure lasting change:

i) Lowering the stakes: Teachers, schools, students and parents often attach disproportionately high stakes to most school assessments. Even examinations that are meant to be diagnostic (such as Pratibha Parv) are perceived as high stakes. The state therefore needs to communicate clearly that the purpose of student assessments and regular monitoring of system performance is not to penalise any stakeholder, but to track learning progress continuously, diagnose gaps in teaching and learning, and set up corrective action.

It is equally important that not every assessment result is reported upwards to the state machinery, since doing so can reduce teachers’ agency to course-correct. For instance, results from formative assessments, which are carried out regularly in classrooms and are more decentralised in nature, should ideally be recorded and tracked by teachers at the classroom level only.

ii) Reliable data as a salient outcome: Goodhart’s law states that ‘when a measure becomes a target, it ceases to be a good measure.’ A disproportionate focus on performance distorts stakeholders’ incentives, pushing them to obtain better results even at the cost of cheating. This problem can be avoided by making the reliability and quality of reported data as salient as student performance itself.

In addition to celebrating top performers, schools and stakeholders should be incentivised to generate assessment data reliably irrespective of performance levels, with the end goal of making accurate reporting socially desirable for schools.

How do we get better data?

Broadly, the reliability of student data can be compromised in two ways: through the quality of the assessment itself, and through the quality of its administration.

A good assessment must be valid (that is, it must measure what it claims to measure), must discriminate between students who understand the concepts well and those who do not, and must be reliable (producing consistent results across repeated measurements). Without these core properties, an accurate diagnosis of students’ learning is not possible. State capacity to address these rather technical concerns is in short supply, but it must be built, perhaps by involving private assessment agencies that support the state in ‘learning by doing’.

In addition, the purpose of assessing students must be clearly defined. Assessments that monitor the overall health of the education system, summative assessments that measure learning at the end of a term, and formative assessments that provide regular feedback to students and parents differ in nature, scope and use.

The quality of an assessment’s administration also has a direct bearing on its reliability. A thorough process evaluation of large-scale examinations such as the NAS must be carried out to identify loopholes at every stage, and stakeholder mapping (who designs the assessment, who administers it and who evaluates it) must be carefully thought through so that the incentives of different stakeholders are not perversely affected. To ensure fairness, test administration by stakeholders who are independent of the state education system, such as other government departments, local communities and third-party assessment agencies, can be explored, depending on the nature of the examination and the available budget.

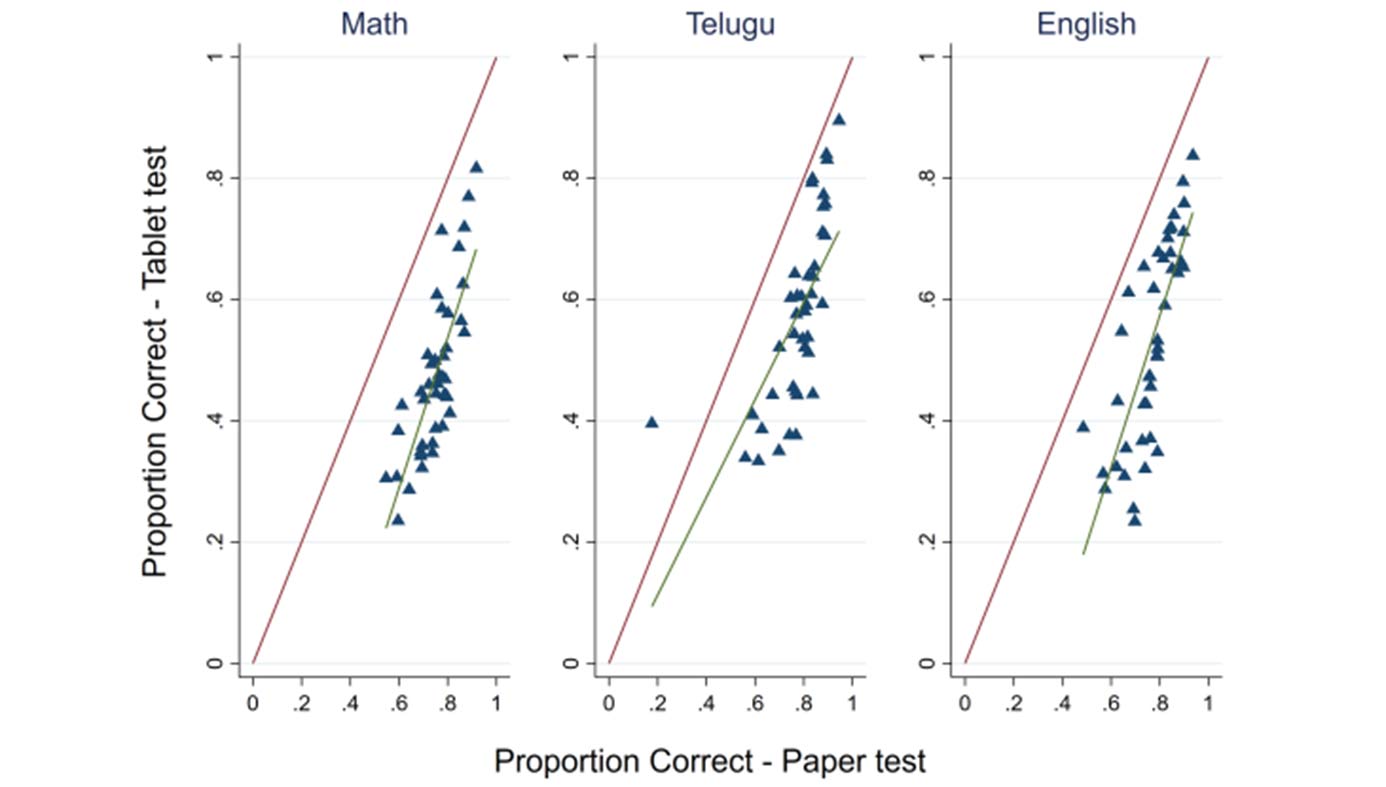

The use of technology and advanced data forensics has also shown promise in several countries in improving the quality of test administration. A study in Andhra Pradesh found 20% inflation in paper-based test scores compared with scores obtained through tablet-based testing (Singh 2020). In Indonesia, an evaluation of the impact of Computer-Based Testing (CBT) on national exam scores in junior secondary schools found that test scores fell sharply, by 5.2 points, after CBT was introduced (Berkhout et al. 2020). Exam results then rose again after two years, indicating that students’ actual learning outcomes improve as opportunities to cheat decrease.

Figure 3. The proportion of students answering correctly in paper-based and tablet-based assessments

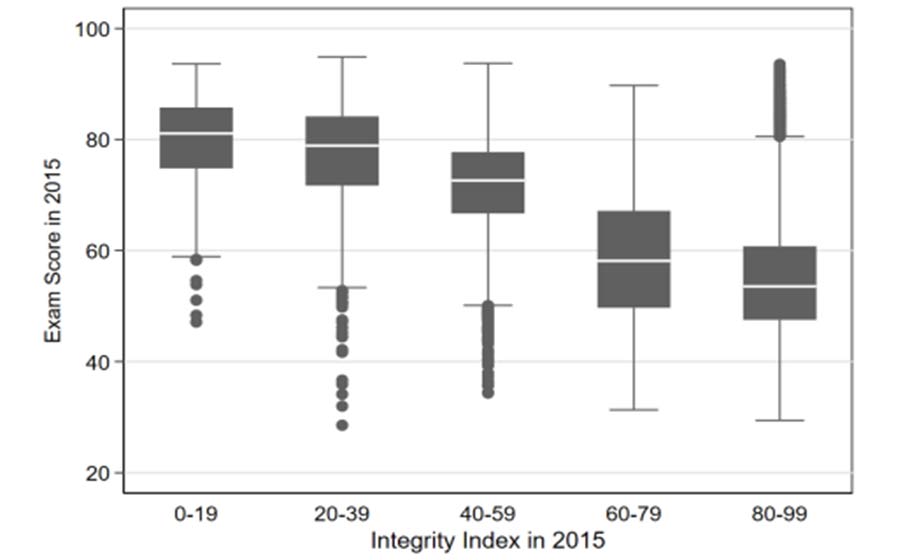

The Indonesian government also published a school-level ‘integrity index’ that identified cheating through suspicious answer patterns. Figure 4 plots the integrity index against exam scores: the lower a school’s integrity, the higher its exam scores, while scores among high-integrity schools vary widely.

Figure 4. Integrity index of schools in Indonesia

Source: Berkhout et al. 2020

Note: The integrity index was used to flag the likelihood and scale of cheating. Answer patterns such as identical answer strings, or counter-intuitive performance across items of different difficulty (for example, scoring high on difficult items but low on easier ones), indicated low integrity, and the absence of such patterns indicated high integrity.
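The note names two kinds of suspicious pattern. The sketch below shows one simple way to quantify each for a school; it is a hypothetical illustration, not the integrity index Berkhout et al. (2020) actually computed. It assumes each student’s chosen options are available as a string, that items are also scored 0/1, and that item difficulty (proportion correct) has been estimated from a larger reference population, so a single school’s answers do not define what counts as ‘easy’. Counter-intuitive performance is measured here as a Guttman error rate: how often a student gets a harder item right but an easier one wrong. All function names are hypothetical.

```python
from collections import Counter
from itertools import combinations


def identical_string_share(answer_strings: list[str]) -> float:
    """Share of students whose full answer string exactly matches another student's."""
    counts = Counter(answer_strings)
    duplicated = sum(c for c in counts.values() if c > 1)
    return duplicated / len(answer_strings)


def guttman_error_rate(scores: list[int], p_correct: list[float]) -> float:
    """Share of (easier, harder) item pairs where the harder item is right and the easier wrong.

    scores: one student's 0/1 item scores.
    p_correct: proportion correct per item in a reference population (higher = easier).
    """
    errors = pairs = 0
    for i, j in combinations(range(len(scores)), 2):
        if p_correct[i] == p_correct[j]:
            continue  # equal difficulty implies no expected ordering
        easy, hard = (i, j) if p_correct[i] > p_correct[j] else (j, i)
        pairs += 1
        if scores[hard] == 1 and scores[easy] == 0:
            errors += 1
    return errors / pairs if pairs else 0.0


def school_flags(answer_strings: list[str], score_rows: list[list[int]],
                 p_correct: list[float]) -> dict[str, float]:
    """Two hypothetical school-level warning signals; higher values suggest lower integrity."""
    mean_guttman = sum(guttman_error_rate(r, p_correct) for r in score_rows) / len(score_rows)
    return {
        "identical_share": identical_string_share(answer_strings),
        "mean_guttman_error": mean_guttman,
    }


# Hypothetical school with four students and three items.
p_correct = [0.9, 0.6, 0.3]  # item 0 easiest, item 2 hardest
answers = ["ABC", "ABC", "ACB", "CBA"]
scores = [[1, 1, 0], [0, 0, 1], [1, 0, 0], [0, 0, 1]]
print(school_flags(answers, scores, p_correct))
```

Such signals are probabilistic: shared answer strings or Guttman errors can also arise by chance, especially in small schools, so they are better suited to prioritising audits than to proving cheating.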

Conclusion

It is tempting, but futile, to believe that we can obtain high-quality data by relying on personal morality and ethics. Officials respond to incentives, so it is critical to adjust the norms within which they perform their roles; the aim should be to make accurate data reporting socially desirable. Sustaining such changes in norms will also require building robust, scalable systems capable of conducting assessments reliably, which would make it possible to discover children’s true learning levels and then improve them.

Further Reading

- Berkhout, E, M Pradhan, Rahmawati, D Suryadarma and A Swarnata (2020), ‘From Cheating to Learning: An Evaluation of Fraud Prevention on National Examinations in Indonesia’, RISE Working Paper 20/046.

- CGD (2021), ‘The Pathway to Progress on SDG 4: A Symposium’, A Collection of Essays, Center for Global Development.

- Johnson, D and A Parrado (2021), ‘Assessing the Assessments: Taking Stock of Learning Outcomes Data in India’, International Journal of Educational Development, 84.

- NITI Aayog (2016), ‘Social Sector Service Delivery: Good Practice Resource Book’, NITI Aayog, Government of India.

- Singh, A (2020), ‘Myths of Official Measurement: Auditing and Improving Administrative Data in Developing Countries’, RISE Working Paper 20/042.

26 July, 2022